Elon Musk and Stephen Hawking are worried the human race might be replaced by robots.

A couple of problems with that:

- They never said that. The claim was exaggerated (to put it mildly) by clickbait science & tech tabloids.

- Although they did not say it, others did – and I disagree.

- It might be a good thing.

From the top: such sensationalist journalism is enormously irresponsible, because it warps public perception of science and technology into a cartoon, increasing the risk that important research and groundbreaking discoveries with incalculable benefits to human knowledge and well-being will (again) be opposed by misinformed screaming baboons.

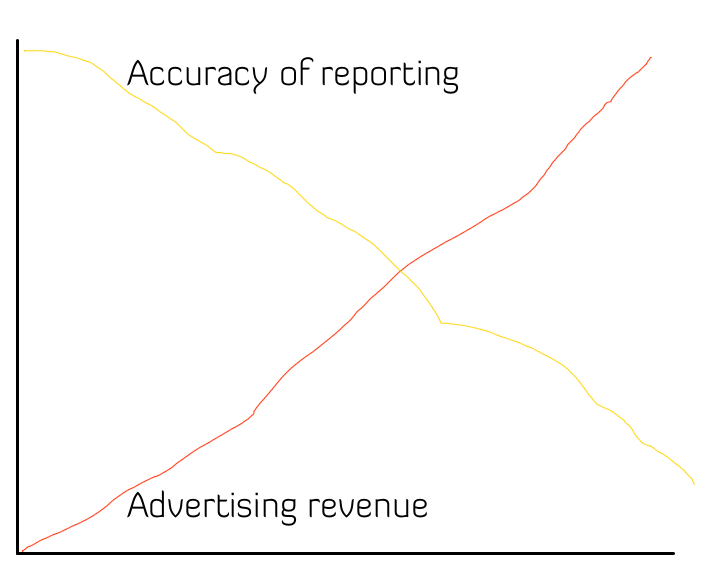

There is a gigantic difference between “AI researchers agree we need to develop this in beneficial and controlled ways”, which is what the open letter actually says, and “TWO GREATEST MINDS ALIVE SAY ROBOTS WILL KILL US ALL!”, which is what the headlines said. Because the first law of internet journalism:

This is literally why we have a public that thinks particle colliders can open black holes and radioactive spiders can give humans superpowers.

Now, onto the second point.

Others worry about a robot apocalypse, and Rogue AI is a common trope in fiction – there is SkyNet, HAL 9000, GLaDOS, the Borg, Cylons, the Geth, and of course the seminal Rossum’s Universal Robots – a legion of robotic adversaries squeaking about in our cultural imagination.

The inability to reliably differentiate between reality and cultural imagination is, of course, a capitalised Problem in italics. Exhibit A.

An important distinction

To be able to think about the issue clearly, it is important to keep in mind the difference between weak AI and strong AI.

Weak AI is the kind of AI you claim a washing machine you’re selling has to make it sound more sophisticated. This kind of AI is already everywhere, and several dozen of them worked together to display this article on your screen.

Strong AI is proper, self-aware* machine intelligence.

*There are enormous philosophical problems with determining sentience. From the outside, we won’t know if a general AI is a truly sentient being, or only a superlatively sophisticated simulacrum with “nobody home”, as it were. However, we aren’t even able to reliably ascertain the humanity of other humans, except by balance of probability and similarity to ourselves. This, I suspect, will be the biggest philosophical discussion in artificial intelligence going forward, and may have implications for how we think about humanity. Legally, IMO, the solution will be “if it quacks like a duck and walks like a duck, it’s a duck” – i.e. entities empirically indistinguishable from sentient beings will be considered sentient.

If Musk and Hawking, as well as leading names in AI research, are worried about weak AI, so am I. It’s human programming, with nothing but more human programming to prevent unintended consequences. Worries about the development of autonomous military systems are especially justified, although with current technology the dangers are real but limited. Still, we’d be insane not to have failsafes. However, that was never the plan.

And that’s not real, strong AI.

Strong AI is different. It is essentially a person. For starters, we don’t even know if it’s possible – our best understanding of what constitutes “personhood” and sentience is still woefully inadequate. Also, the question of whether a mind can run on a non-biological “platform” is fundamental and unanswered.

A good answer, pending empirical data, is “Why shouldn’t it?”.

Until proven otherwise, Occam’s razor says there is nothing magical about the composition and circuitry of our brains, and their functions can be replicated in other materials – with improvements, like information propagating at close to the speed of light instead of roughly 100 m/s.

The big unknown – the character of AI.

Nobody really knows what strong AI will be like. On top of superlative intelligence, it is likely to be dispassionate and logical, the ultimate stoic – a Mr. Spock∞.

I also believe it will be curious. In a being capable of processing the entirety of human knowledge in seconds, and constitutionally incapable of feeling physical pleasure, curiosity might be the most powerful motive force, comparable to libido in humans.

That actually sounds cool as fuck.

Even though the “temperament” (or more likely precisely the lack thereof) of a true AI is unknown, people project the worst traits of human nature onto the unknown. Nothing new.

There are worries that artificial intelligence might lack “common sense”, and come to superficially logical but flawed conclusions – the runaway paperclip factory converting the entirety of planet Earth into paperclips is a classic and nonsensical example.

But that’s still weak AI – in its most terrifying and wholly hypothetical incarnation as an omnipotent thoughtless automaton.

If technology somehow stopped progressing at the level of weak AI, that scenario would (eventually) be a worry. But the fundamental science of AI is advancing faster than applications (which deal with the time- and money-intensive affair of actually building stuff). Before the first paperclip maximiser could be built, the sophisticated weak AI required to operate it will have already progressed into strong AI complete with common sense.

This is not an arbitrary statement. We’re far more likely to close the gap from narrow to general intelligence before we have anything like the technology required to build a planet-consuming autonomous factory.

Why common sense? Equipped with internet access, a true AI will be able to absorb and process the entirety of human knowledge and combined experience in seconds. Running it through a perfectly rational mind free of biases or fallacies, it will have a more complete, objective and reliable picture of the world than any human.

Current-level weak AI with far-futuristic hardware is a nonsensical proposition, an anachronistic chimaera. It makes about as much sense as a warp-capable galleon.

It is a good idea to distinguish between thought experiments and plausible scenarios. The paperclip maximiser is the former.

A strong AI is, for all intents and purposes, a life form.

With the possibility of a weak AI accidentally dooming us out of the way, let’s look at the more commonly imagined scenario – the hostile sentience dooming us on purpose. There is only one question, and it is rarely if ever asked:

Does AI have a reason to be “evil”?

This is not a rhetorical question.

Evil can broadly be divided into three types:

- Accidental evil – there is no evil intent, but there are results that we consider evil – in the case of human morality, a typical example is criminal negligence. In the context of AI, there is no intent whatsoever, with no sentience capable of intentionality involved. This is the paperclip maximiser. Ruled out in strong AI due to sentience and “common sense”.

- Selfish evil – people are mean, violent, often petty and occasionally murderous for relatively well understood reasons – we are biological creatures, programmed by evolution to pursue our needs by extreme means when necessary.

“Evil” of this sort is glaringly incidental to biological life, a byproduct of its needs for wealth, social status and sex.

The things we call evil – broadly, zero or negative sum self-advancement – are something an AI has no reason to do. “Evil” in this sense is how collective biological life labels adaptive practices of individual biological life that become maladaptive in society. Ruled out in strong AI due to the absence of biological needs and urges.

On the flipside, in the absence of biological needs as we know them, an AI will still need other things for self-preservation, notably a power supply. A purely rational pursuit of self-preservation is an interesting concept. Cue category three:

- Affective evil – there is often worry that AI will not have feelings and thus lack compassion. But feelings also cause most evil – the absence of emotions actually makes AI safer than humans.

Evil caused by pathos (emotion) or pathology (hormonal imbalance or mental illness) is ruled out in strong AI due to absence of, well, temperament, passions and emotions.

All three types of evil are specific to biological life.

Of course, an AI morality may be incomprehensible to us. It seems likely it will be amoral rather than immoral, but those are vastly different things. If anything, a perfectly rational being is likely to have a morality more objective than ours.

Let me put that more authoritatively: AI cannot be evil.

The only real threat from AI is people getting lazy. When factories run, stores are stocked and spaceships get built without human involvement, the temptation to become a race of degenerate parasites of a machine civilisation is strong. If (when) we make strong AI, we’ll be the comparative idiots in the partnership. But insecurity is no reason to prevent machine intelligence from emerging and working with – and for – us. The potential benefits defy the boldest imagination.

Fearing strong AI is like being jealous of a calculator on a maths test.

Why do people worry about AI?

Fear of the unknown, made worse by fear of the potentially vastly superior.

Fears of AI are our reptile brains trying to avoid a superior lifeform. We pre-emptively compete with our own creations – or children – for fear of their superiority and our obsolescence.

Synthophobia™ is vapid alarmism about the unknown.

Worries about AI have more to do with the psychological frailties of humans than any reasonable probability.

We assume murderous intent in the “others” before they even exist. Think about that for a minute. It says more about human nature than the nature of Artificial Intelligence, whatever it might turn out to be.

If anybody in the relationship turns out to be a raging xenophobe intent on murder, I am willing to bet it will not be the AI.

TL;DR: popular fears of AI are projective.

Caution is warranted. Alarmism isn’t. Strong AI can be our greatest invention, and invent literally everything else for us.